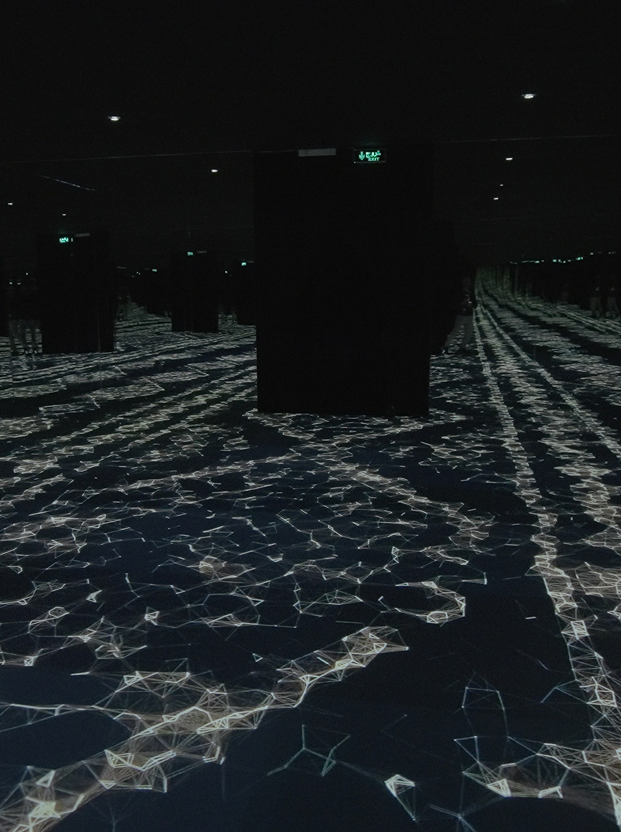

HW3: From Touch to Trigger — Interactive Sensors in Space

This session makes interactivity practical and artist-centered: participants compare sensor types, then build a working prototype that turns physical input into a clear visual response. The emphasis is not just on wiring but on interaction design fundamentals (thresholds, latency, feedback, and calibration for the specific room) so that the experience reads quickly to audiences. Several build tracks lead to the same learning goal, ranging from beginner-friendly camera-based motion sensing to more advanced sensing setups.
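Latency and smoothing pull against each other: filtering out jitter also delays the response. The sketch below is minimal and rests on several assumptions. It uses an exponential moving average (EMA) as the filter, although the session may use a different one. It then measures how many samples pass after a sudden input change before the filtered signal crosses a fixed threshold. The function names and the threshold of 0.5 are illustrative, not taken from the session.

```python
def ema(samples, alpha):
    """Exponential moving average; smaller alpha = smoother but slower."""
    out = []
    y = samples[0]  # start at the first reading to avoid a fake ramp-up
    for x in samples:
        y = alpha * x + (1 - alpha) * y
        out.append(y)
    return out


def samples_to_trigger(alpha, threshold=0.5, n=100, step_at=10):
    """Samples of delay between a 0 -> 1 input step and the filtered signal crossing threshold."""
    step = [0.0] * step_at + [1.0] * (n - step_at)
    for i, y in enumerate(ema(step, alpha)):
        if y >= threshold:
            return i - step_at
    return None  # never crossed within n samples


if __name__ == "__main__":
    for alpha in (0.8, 0.3, 0.1, 0.02):
        print(f"alpha={alpha}: {samples_to_trigger(alpha)} samples of delay")
```

With these values the delay grows from 0 samples (alpha = 0.8) to 34 samples (alpha = 0.02). At an assumed 30 readings per second, 34 samples is more than a second, which is long enough for a visitor to feel the piece is unresponsive.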

Date:

Sun, Jan 2026

Time:

02:00 PM - 04:00 PM

Language:

Location:

Riyadh, JAX District

Fees:

100

Learning goals

- Understand common sensor types and their strengths and limitations.

- Convert raw sensor data into stable, usable signals.

- Map signals to visuals in a way audiences understand quickly.

- Calibrate interactions for real spatial conditions.
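Calibrating for the room usually starts by measuring the sensor while nobody is interacting with it. The sketch below is minimal and makes two assumptions. It assumes that readings rise when someone approaches. It also assumes a "baseline plus k standard deviations" rule for the trigger threshold. The value k = 3 is a hypothetical starting point to tune on site, and `calibrate` is an illustrative name.

```python
import statistics


def calibrate(ambient, k=3.0):
    """Derive a trigger threshold from readings taken in the empty room.

    Assumes higher readings mean "more presence"; k sets how far above
    the room's noise floor a reading must be to count as an interaction.
    """
    baseline = statistics.mean(ambient)
    noise = statistics.pstdev(ambient)  # population standard deviation
    return baseline + k * noise


# Hypothetical readings logged with nobody near the sensor
ambient = [100, 102, 98, 101, 99, 100]
threshold = calibrate(ambient)
print(f"trigger above {threshold:.2f}")
```

Running this again in the actual space matters because lighting, reflections, and electrical noise can shift the baseline from one room to another.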

Learning outcomes

- Choose an appropriate sensor for a given interaction concept.

- Wire and test sensors that feed data into a software system.

- Implement a basic signal chain: raw → filter → threshold → event/value mapping.

- Demonstrate an interaction prototype and explain its design logic (input → response → feedback).
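The signal chain named above (raw → filter → threshold → event/value mapping) can be sketched in a few lines. This minimal sketch makes several assumptions:

- The filter is an EMA.
- The threshold uses hysteresis, with two levels, `on` and `off`. This is a common way to stop a trigger from flickering when the signal hovers near a single value.
- Readings are already scaled roughly to 0..1.

The class name `SignalChain` and its parameters are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class SignalChain:
    """Hypothetical raw -> filter -> threshold -> event/value chain.

    alpha: EMA smoothing factor (0 < alpha <= 1); lower is smoother but slower.
    on/off: hysteresis levels with on > off, so noise near one level
            cannot toggle the trigger on every sample.
    lo/hi: expected range of the filtered signal, mapped to 0..1.
    """
    alpha: float = 0.3
    on: float = 0.6
    off: float = 0.4
    lo: float = 0.0
    hi: float = 1.0
    _y: Optional[float] = field(default=None, init=False, repr=False)
    _active: bool = field(default=False, init=False, repr=False)

    def update(self, raw: float) -> Tuple[Optional[str], float]:
        # 1. Filter: exponential moving average (first sample initialises it)
        if self._y is None:
            self._y = raw
        else:
            self._y = self.alpha * raw + (1 - self.alpha) * self._y

        # 2. Threshold with hysteresis: emit an event only on state changes
        event = None
        if not self._active and self._y >= self.on:
            self._active, event = True, "enter"
        elif self._active and self._y <= self.off:
            self._active, event = False, "exit"

        # 3. Value mapping: normalise to 0..1 and clamp, e.g. to drive brightness
        value = (self._y - self.lo) / (self.hi - self.lo)
        return event, min(1.0, max(0.0, value))


chain = SignalChain()
readings = [0, 0, 1, 1, 1, 1, 0, 0, 0, 0]  # someone steps in, then leaves
results = [chain.update(r) for r in readings]
events = [e for e, _ in results]
print(events)
```

In this run, `enter` fires two samples after the input steps up, and `exit` fires one sample after it drops. The gap between `on` and `off` prevents a visitor hovering at the edge of the sensing zone from retriggering the visual.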

Learning Details

- Duration: ~2 h 50 min (170 min)

- Explore (10 min): prototype examples and a case-study reference

- Learn (35 min): theory module on sensors, signal conditioning, and interaction design principles

- Create (110 min): Track A/B/C builds plus calibration in the actual room

- Share (15 min): live demo and critique (responsiveness, legibility, meaning)

- Deliverable: a working interaction prototype, calibration notes, and an interaction archetype statement
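The overview mentions a beginner-friendly camera-motion option. One simple approach is frame differencing: measure the fraction of pixels that changed noticeably between consecutive frames. This is an assumption here, and the session may use another method. In practice the frames would come from a webcam, for example through OpenCV's `cv2.VideoCapture`. In this sketch, plain nested lists of grayscale values stand in so that it runs without dependencies. The names `motion_amount` and `pixel_threshold` are hypothetical.

```python
def motion_amount(prev, curr, pixel_threshold=25):
    """Fraction of pixels that changed noticeably between two frames.

    prev, curr: equally sized 2-D lists of grayscale values (0-255).
    pixel_threshold: minimum per-pixel change counted as motion; raising it
    ignores camera noise and small lighting flicker.
    """
    changed = total = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


# Two tiny hypothetical 3x4 frames: a bright object enters on the right.
frame1 = [[10, 10, 10, 10],
          [10, 10, 10, 10],
          [10, 10, 10, 10]]
frame2 = [[10, 10, 10, 200],
          [10, 10, 12, 200],  # the 10 -> 12 change is below the threshold
          [10, 10, 10, 200]]
print(motion_amount(frame1, frame2))
```

The resulting 0..1 motion amount can then be filtered, thresholded, and mapped like any other sensor value.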